Automated Reinforcement Learning: Exploring Meta-Learning, AutoML, and LLMs

July, 2024 // ICML Workshop

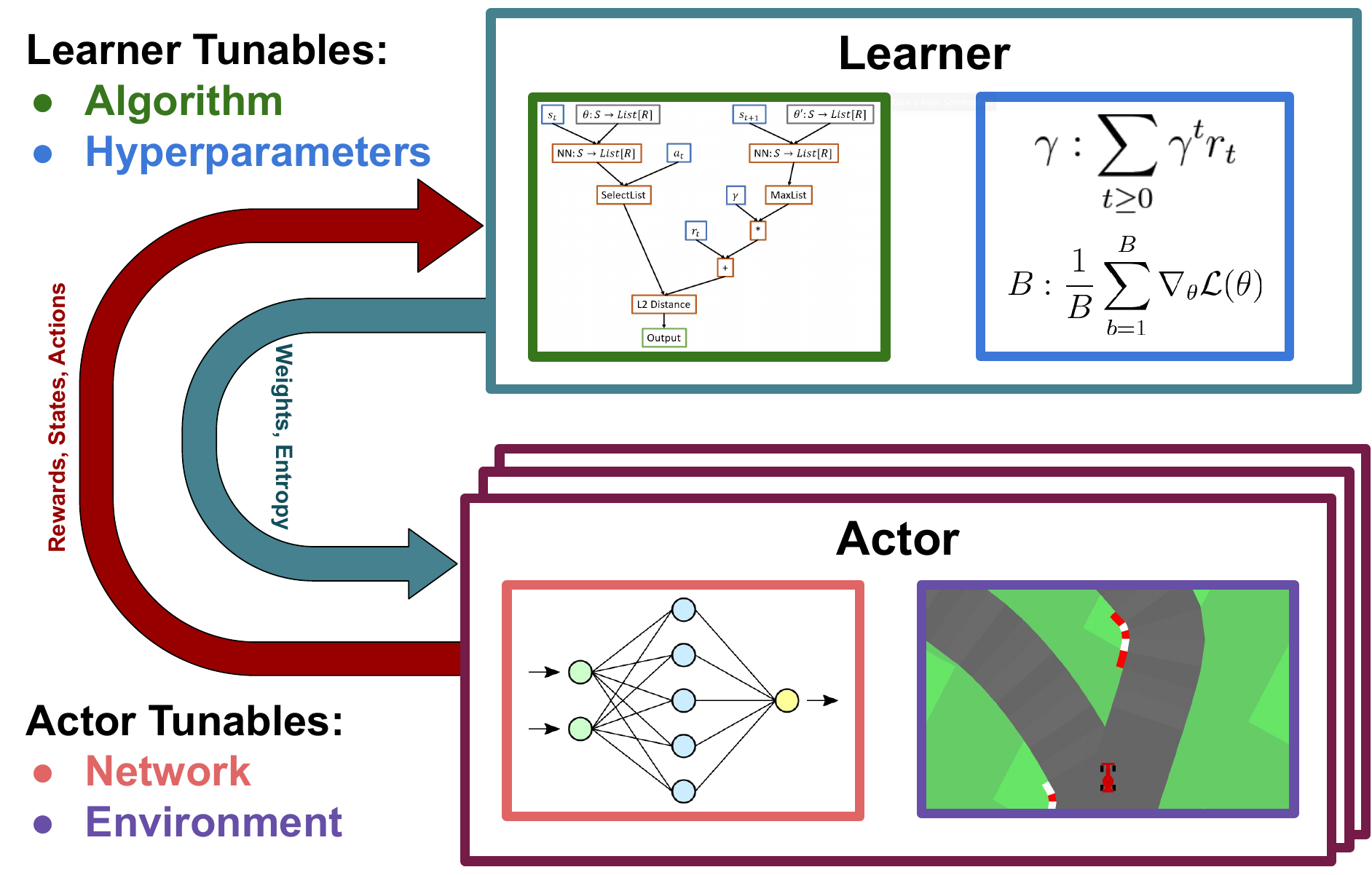

The past few years have seen a surge of interest in reinforcement learning (RL), with breakthrough successes in games, robotics, chemistry, logistics, nuclear fusion, and more. These headlines, however, blur the picture of what remains a brittle technology, with many successes relying on heavily engineered solutions. Indeed, several recent works have demonstrated that RL algorithms are sensitive to seemingly mundane design choices. Thus, it is often a significant challenge to apply RL effectively in practice, especially to novel problems, which limits its potential impact and narrows its accessibility.

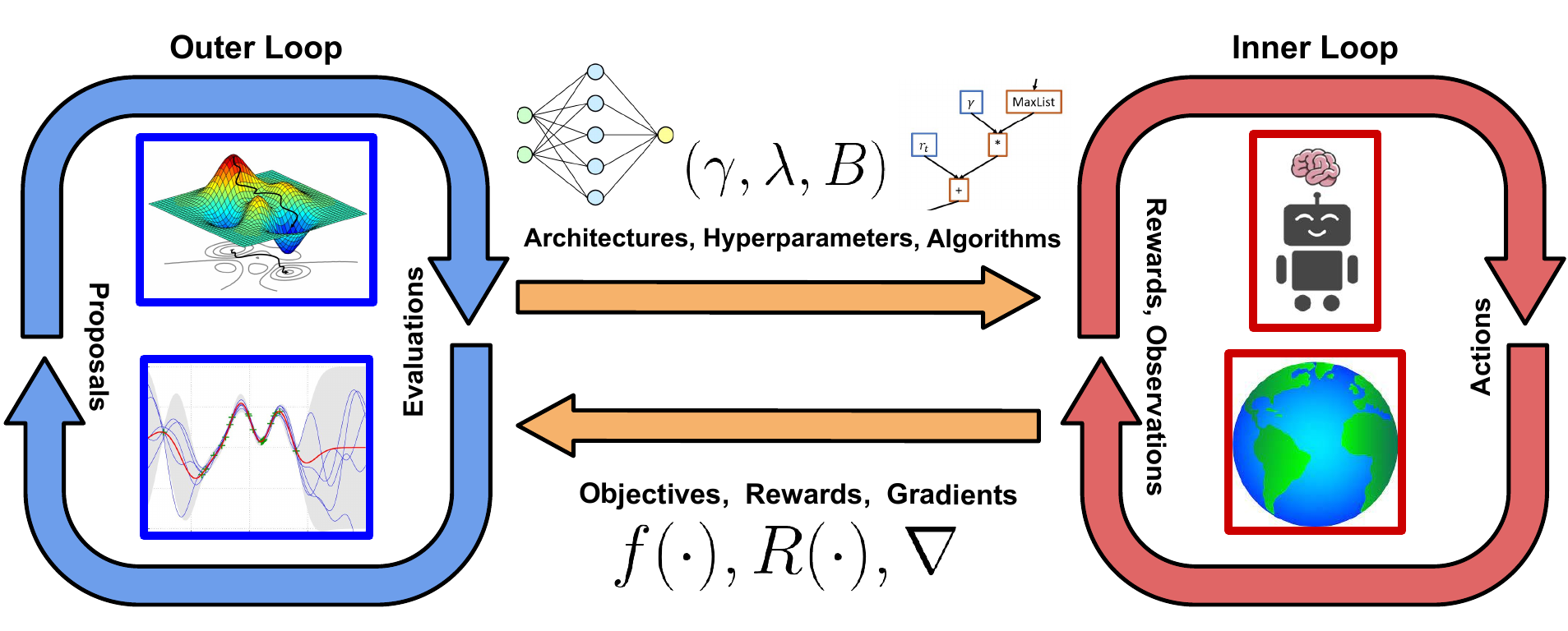

In this workshop, we want to bring together different communities working on solving these problems. Distinct sub-communities spanning RL, meta-learning, and AutoML have been working toward making RL work out of the box in arbitrary settings; this is the AutoRL setting. Recently, LLMs and their in-context learning abilities have significantly impacted all of these communities. LLM agents now tackle traditional RL tasks, while few-shot RL agents that improve efficiency and generalization are also working toward automating RL. LLMs have also influenced AutoML directly, with papers such as OptFormer. However, there is currently little crossover between these communities. We therefore want to create a space that connects them and cross-pollinates ideas for automating RL. We believe closer connections between these communities will ultimately lead to faster and more focused progress on AutoRL, and that an in-person workshop is the ideal way to foster greater interaction between them. Through a mixture of diverse expert talks and opportunities for conversation, we hope to highlight the many facets of current AutoRL approaches and show where collaboration across fields is possible.

Update:

The workshop has been accepted at ICML 2024, to be held in Vienna.

Location: Stolz 0, Messe Wien Exhibition Congress Center

Date: 27 July 2024

Contact: autorlworkshop@ai.uni-hannover.de

Accepted papers

Accepted papers can be found here. Camera-ready versions of all the papers will soon be available on OpenReview. We also want to highlight three excellent spotlight papers:

- Can Learned Optimization Make Reinforcement Learning Less Difficult? (Alexander D. Goldie, Chris Lu, Matthew Thomas Jackson, Shimon Whiteson, Jakob Nicolaus Foerster)

- BOFormer: Learning to Solve Multi-Objective Bayesian Optimization via Non-Markovian RL (Yu Heng Hung, Kai-Jie Lin, Yu-Heng Lin, Chien-Yi Wang, Ping-Chun Hsieh)

- Self-Exploring Language Models: Active Preference Elicitation for Online Alignment (Shenao Zhang, Donghan Yu, Hiteshi Sharma, Ziyi Yang, Shuohang Wang, Hany Hassan Awadalla, Zhaoran Wang)

Official schedule

All times listed below are in Central European Summer Time (CEST).

| Time | Session | Speaker(s) |
|---|---|---|
| 9:00 - 9:30 AM | Invited Talk 1: TBA | Chelsea Finn |
| 9:30 - 9:45 AM | Contributed Talk 1: Self-Exploring Language Models: Active Preference Elicitation for Online Alignment | Shenao Zhang |
| 9:45 - 10:00 AM | Contributed Talk 2: BOFormer: Learning to Solve Multi-Objective Bayesian Optimization via Non-Markovian RL | Yu-Heng Hung and Ping-Chun Hsieh |
| 10:00 - 11:00 AM | Poster Session | |
| 11:00 - 11:30 AM | Invited Talk 2: Learning to Solve New sequential decision-making Tasks with In-Context Learning + work on generalization in offline RL | Roberta Raileanu |
| 11:30 AM - 12:00 PM | Invited Talk 3: AI-Assisted Agent Design with Large Language Models and Reinforcement Learning | Pierluca D'Oro |
| 12:00 - 12:30 PM | Breakout Session X | |
| 12:30 - 2:00 PM | Lunch Break | |
| 2:00 - 2:30 PM | Invited Talk 4 | Michael Dennis |
| 2:30 - 2:45 PM | Contributed Talk 3: Can Learned Optimization Make Reinforcement Learning Less Difficult? | Alexander D. Goldie |
| 2:45 - 3:15 PM | Invited Talk 5: In defense of Atari: The ALE as a benchmark for AutoRL | Pablo Samuel Castro |
| 3:30 - 4:00 PM | Coffee Break | |
| 4:00 - 5:00 PM | Panel Discussion & Closing Remarks | Pablo Samuel Castro, Jacob Beck, Xingyou (Richard) Song, Alexander D. Goldie |

Important Dates (currently all provisional)

| Milestone | Date |
|---|---|
| Paper Submission Deadline | 31.05.2024 AOE |
| Decision Notifications | 17.06.2024 |
| Camera Ready Paper Deadline | 01.07.2024 |
| Paper Video Submission Deadline | 08.07.2024 |
| Workshop | 27.07.2024 |

Call for Papers

We invite both short (4-page) and long (9-page) anonymized submissions that develop algorithms, benchmarks, and ideas to allow reinforcement learning agents to learn more effectively out of the box. Submissions should be in the NeurIPS LaTeX format. We also welcome review and position papers that may foster discussion. Note that, per ICML guidelines, we do not accept work previously published at other machine learning conferences, but we are open to work that is currently under submission to a conference.

The workshop will focus on novel and unpublished work including, but not limited to, the areas of:

- LLMs for reinforcement learning

- In-context reinforcement learning

- Meta-reinforcement learning

- RL algorithm discovery

- Fairness & interpretability via AutoRL

- Curricula and open-endedness in RL

- AutoML for reinforcement learning

- Reinforcement learning for LLMs

- NAS for deep reinforcement learning

- Theoretical guarantees for AutoRL

- Feature and hyperparameter importance for RL algorithms

- Demos of AutoRL systems

- Hyperparameter-agnostic RL algorithms

Papers should not exceed 9 pages in length, excluding references and appendices. The paper, references, and appendices should be submitted as a single file via OpenReview. The review process will be double-blind. We will not have archival proceedings, but will share accepted papers on the workshop website. We encourage authors to include code with their papers, though the code must be anonymized along with the submission. Any paper that includes code will receive a code badge on the workshop website. Accepted papers require a short summary video of at most 5 minutes; these videos will be uploaded to YouTube.

If you are interested in reviewing for the workshop, please get in touch through this Google form.

Key Dates

- Paper submission deadline: 31.05.2024 AOE

- Decision notifications by: 17.06.2024

- Camera ready version due: 01.07.2024

- Video deadline: 08.07.2024

Speakers

DeepMind, Université de Montréal and Mila

DeepMind

Mila, Meta

Meta

Stanford University

Panel Discussion

DeepMind, Université de Montréal and Mila

DeepMind

University of Oxford

University of Oxford

Organizers

Leibniz Universität Hannover

University of Freiburg

University of Freiburg

Amazon Research

DeepMind

RWTH Aachen University